KNOW YOUR WORLD. KNOW YOUR SELF.

UNPACK THE COMPLEXITY OF EVERYDAY LIFE


Featured

IDENTITY

AI

WAR

THE VOICE

FEMINISM

IMMIGRATION

CAPITALISM


Opinion + Analysis

Business + Leadership

The super loophole being exploited by the gig economy

9 APRIL 24


Opinion + Analysis

Society + Culture

Pleasure without justice: Why we need to reimagine the good life

3 APRIL 24


Opinion + Analysis

Society + Culture

Taking the cynicism out of criticism: Why media needs real critique

25 MARCH 24


Opinion + Analysis

Business + Leadership

The Ethics Institute: Helping Australia realise its full potential

11 MARCH 24


Opinion + Analysis

Society + Culture

Read me once, shame on you: 10 books, films and podcasts about shame

7 MARCH 24


Opinion + Analysis

Society + Culture

AI might pose a risk to humanity, but it could also transform it

27 FEBRUARY 24


Opinion + Analysis

Society + Culture

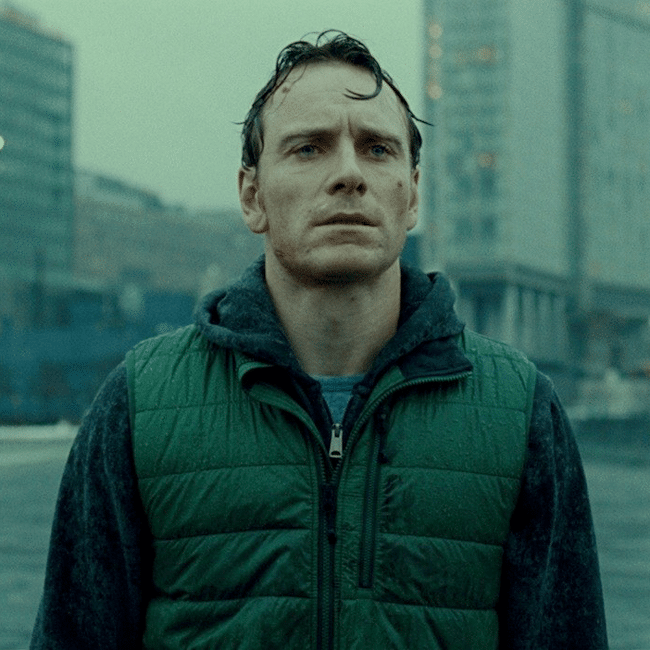

'The Zone of Interest' and the lengths we'll go to ignore evil

26 FEBRUARY 24